PyNVMe3 Testing on Server

Last Modified: November 25, 2024

Copyright © 2020-2024 GENG YUN Technology Pte. Ltd.

All Rights Reserved.

PyNVMe3 is a powerful and comprehensive NVMe SSD testing tool that sets rigorous standards for extreme performance and high reliability under heavy stress scenarios for enterprise SSDs. It also supports test engineers in developing customized test cases through its Python API. As a result, PyNVMe3 has been widely adopted in the industry and has become a professional NVMe SSD testing software platform. Over the past year, we have further refined the software and developed an extensive library of test scripts for enterprise NVMe SSDs, based on our reference desktop and server testing platforms.

1. Hardware Platform

While PyNVMe3 is a software-defined testing platform, we have also selected several hardware platforms for in-depth tuning. Users can choose the hardware platform that best suits their testing needs and enjoy the best user experience.

1.1. Desktop Testing Platform

During the R&D phase of NVMe SSDs, desktops are commonly used as the primary testing platform. Below is the configuration of our desktop testing platform:

- Motherboard: Asus X670E ROG CROSSHAIR GENE

- CPU: AMD Ryzen™ 5 7600X

- Memory: DDR5, 16GB x 2

- OS Drive: 2.5″ SATA SSD

This configuration provides excellent single-core performance, sufficient core count, and supports PCIe Gen5 and DDR5, meeting the requirements for enterprise NVMe SSD testing. Additionally, we have equipped the platform with multiple test fixtures to further enhance its testing capabilities.

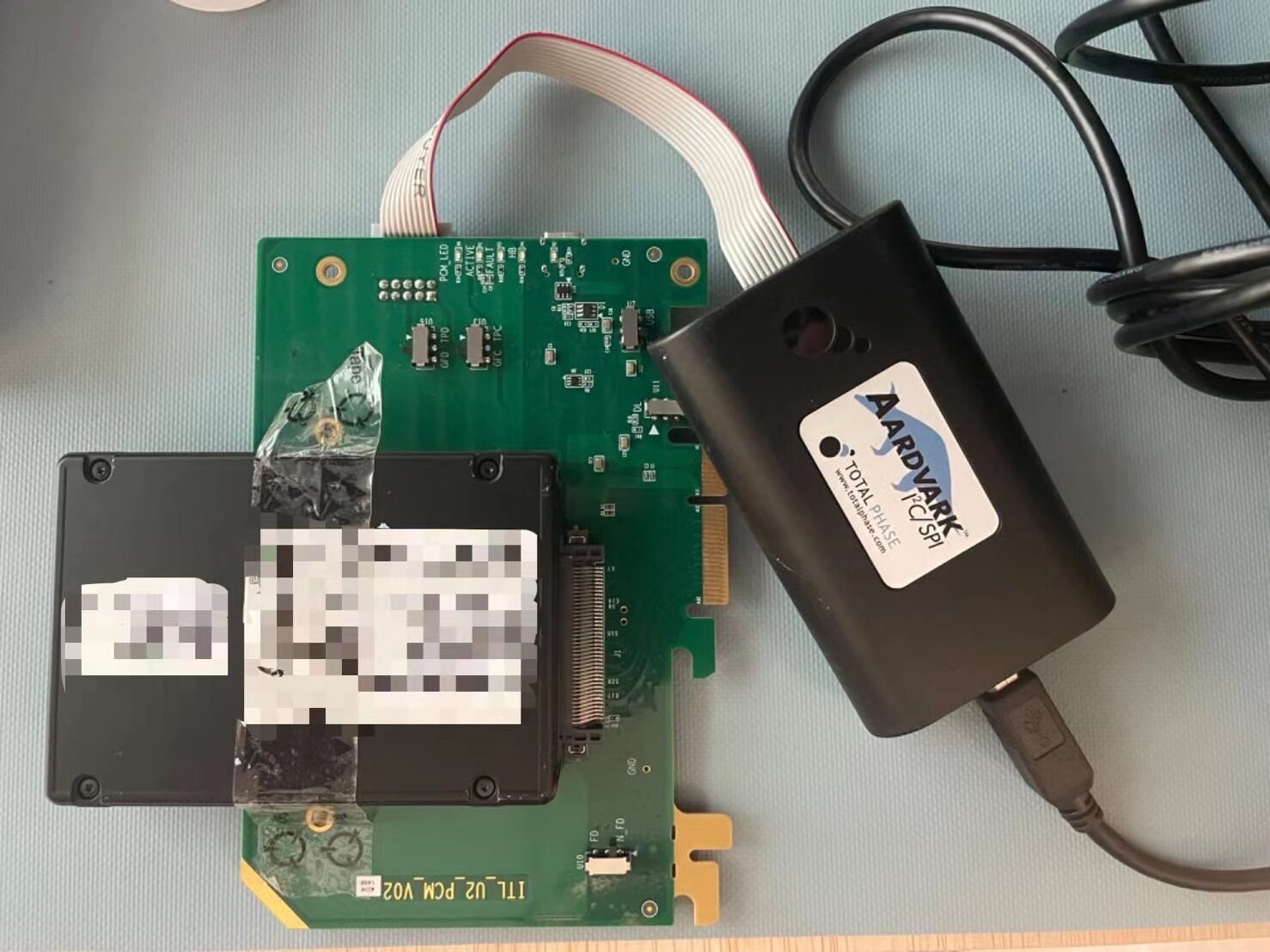

The PMU2 Power Module, the green PCB shown in the image, provides a wide range of features essential for enterprise NVMe SSD testing:

- AIC-U.2 adapter.

- PCIe Gen5 support.

- Script-controlled main power switch.

- Script-controlled AUX power switch.

- SSD power consumption measurement.

- Compatibility with the Total Phase I2C module for MI (Management Interface) testing.

- An M.2 version is available, supporting all of the above functions.

- Support for EDSFF (E3.S/E1.S) series is coming soon.

The desktop testing platform now supports multiple PMU2 interposers, so several DUTs can be tested simultaneously. Users assign a slot number to each PMU2 interposer in the slot.conf file. After the system boots, users must pair each PMU2 interposer with its DUT by unplugging and re-plugging the USB control cable of each PMU2, one at a time, in the order of the assigned slot numbers. This ensures that every PMU2 interposer is matched to the correct DUT in the slot.conf configuration and simplifies multi-device setup. With this capability, the desktop platform can efficiently test multiple NVMe SSDs during the R&D phase.

Furthermore, the Total Phase I2C module provides an affordable, flexible, and reliable infrastructure for NVMe-MI out-of-band testing.

This hardware platform is highly cost-effective, making it suitable for daily development and testing by R&D engineers. We have developed numerous PyNVMe3 protocol, stress, power cycling, power consumption, and out-of-band management test scripts on this platform.

1.2. General Server Testing Platform

While desktop testing platforms are convenient for daily use by R&D personnel and cover a wide range of tests, their testing density and efficiency are limited. To improve the efficiency of routine testing, general servers can be utilized, such as the Asus RS520A-E12-RS24U.

This 2U general server, equipped with an AMD CPU, supports PCIe Gen5 and can test up to 24 U.2 NVMe SSDs simultaneously, providing exceptional testing density and cost-effectiveness. The specific configuration is as follows:

- CPU: 1 x AMD EPYC 9254 (24C/48T, 2.9GHz, 128MB L3 Cache)

- Memory: 4 x 16GB DDR5-4800 ECC RDIMM

- OS Drive: 2 x 2.5″ SATA SSD

General server platforms cannot connect to external test fixtures, so their test coverage is not as extensive as that of desktop platforms. In actual R&D processes, combining server and desktop testing platforms strikes the right balance between testing efficiency and coverage.

Selection and Deployment Recommendations

PyNVMe3 customers can choose and deploy their own servers. Below are key recommendations for selecting and optimizing server hardware:

- U.2 Slots:

Each U.2 slot should support PCIe Gen5 and be connected directly to the CPU to minimize latency. To improve cooling during testing, SSDs can be inserted in alternate slots, leaving more space for airflow. Although this reduces the number of active test drives, it significantly improves cooling and keeps operation stable under heavy workloads.

- CPU Cores:

Users can allocate 3-6 CPU cores per DUT, depending on the workload. PyNVMe3’s NVMe driver has been fully optimized and achieves over 60% higher single-core IOPS than the Linux kernel driver. As a result, fewer CPU cores can handle higher workloads, which reduces the overall CPU requirements.

- Memory:

PyNVMe3’s CRC table requires a large amount of DRAM. For example, an 8TB SSD with an LBA size of 512 bytes requires 16GB of DRAM. We recommend 128GB or more of DDR5 memory when testing larger-capacity drives or multiple DUTs simultaneously. Also populate enough memory channels so that memory bandwidth does not become a bottleneck for SSD sequential read performance.
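The sizing guidance above can be turned into quick arithmetic. The sketch below is a hypothetical helper, not part of PyNVMe3; it assumes the CRC table costs about 1 byte of DRAM per LBA, which is inferred from the 8TB / 512-byte example (8×10^12 / 512 ≈ 15.6×10^9 LBAs, roughly 16GB), and it ignores memory used by the OS and I/O buffers.

```python
"""Rough DUT sizing helpers for a PyNVMe3 test server (illustrative sketch)."""

# Assumption: about 1 byte of CRC table per LBA, inferred from the 8TB / 512B
# example in the text; the real PyNVMe3 layout may differ.
CRC_BYTES_PER_LBA = 1

TB = 10**12  # decimal terabyte, as used on drive labels


def crc_table_bytes(capacity_bytes: int, lba_size: int) -> int:
    """Approximate DRAM used by one DUT's CRC table."""
    return (capacity_bytes // lba_size) * CRC_BYTES_PER_LBA


def total_crc_bytes(duts: list) -> int:
    """Sum the CRC-table DRAM over a list of (capacity_bytes, lba_size) pairs."""
    return sum(crc_table_bytes(cap, lba) for cap, lba in duts)


def max_duts_by_cores(available_cores: int, cores_per_dut: int) -> int:
    """Number of DUTs the CPU budget allows at a given cores-per-DUT ratio."""
    return available_cores // cores_per_dut
```

For example, `total_crc_bytes([(8 * TB, 512)] * 12)` gives 187.5×10^9 bytes for twelve 8TB drives with 512-byte LBAs, which illustrates why 128GB is a starting point rather than an upper bound for multi-DUT setups. Formatting drives with 4096-byte LBAs cuts the per-drive figure by a factor of eight under the same assumption.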

Optimizing Multi-Socket Server Platforms

For multi-socket servers, ensuring proximity between CPU, DUT, and memory is essential to maximize performance and efficiency. Below are key considerations when deploying PyNVMe3 on NUMA-based platforms:

- CPU-to-DUT Affinity:

Place each DUT in a PCIe slot attached directly to the CPU socket whose cores will drive it. This minimizes latency and ensures that I/O operations are handled efficiently by local CPU cores, avoiding unnecessary cross-socket communication. PyNVMe3 simplifies this process by allowing users to assign specific CPU cores to each DUT in the slot.conf file.

- Memory Allocation:

PyNVMe3 allocates memory for each DUT from the same NUMA node as the CPU that manages the DUT. This maximizes memory bandwidth and minimizes latency, since accessing memory on a remote NUMA node can degrade performance.

- Balanced Resource Distribution:

Distribute DUTs evenly across CPU sockets to avoid overloading a single socket. For example, in a server with 24 U.2 slots and two CPU sockets, assign 12 DUTs to each socket. This balance keeps CPU core usage, memory bandwidth, and PCIe resource availability consistent across the platform.
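To pick a local CPU core for each DUT, Linux exposes the NUMA node of every PCIe device in `/sys/bus/pci/devices/<BDF>/numa_node` and the CPUs of every node in `/sys/devices/system/node/node<N>/cpulist`. The sketch below is a hypothetical helper, not a PyNVMe3 tool: it reads those files and hands out one core per DUT from the DUT's own node, so the result can be copied into the CPU column of slot.conf. The `stride` parameter is an illustrative assumption for spacing DUTs apart on a node.

```python
from __future__ import annotations

from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist such as '0-3,8,10-11' into individual CPU ids."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_numa_node(bdf: str) -> int:
    """NUMA node of a PCIe device from sysfs; -1 means the kernel does not know."""
    return int(Path(f"/sys/bus/pci/devices/{bdf}/numa_node").read_text())


def read_node_cpus(node: int) -> list[int]:
    """CPU ids that belong to one NUMA node, from sysfs."""
    path = Path(f"/sys/devices/system/node/node{node}/cpulist")
    return parse_cpulist(path.read_text())


def assign_cores(dut_nodes: dict[str, int],
                 cpus_by_node: dict[int, list[int]],
                 stride: int = 1) -> dict[str, int]:
    """Pick one CPU per DUT from the DUT's own NUMA node.

    `stride` spaces consecutive DUTs apart on a node; with a node CPU list
    of [0, 1, 2, ...], a stride of 2 yields cores 0, 2, 4.
    """
    next_index = {node: 0 for node in cpus_by_node}
    plan: dict[str, int] = {}
    for bdf, node in sorted(dut_nodes.items()):
        if node not in cpus_by_node:
            raise ValueError(f"{bdf}: no CPU list for NUMA node {node}")
        idx = next_index[node]
        if idx >= len(cpus_by_node[node]):
            raise ValueError(f"NUMA node {node} has no free CPU left for {bdf}")
        plan[bdf] = cpus_by_node[node][idx]
        next_index[node] = idx + stride
    return plan
```

On a live system, build `dut_nodes` by calling `read_numa_node()` for each DUT's BDF and `cpus_by_node` with `read_node_cpus()` for each node. Keeping the I/O readers separate from `assign_cores()` makes the planning logic easy to check without real hardware.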

1.3. Customized Server Testing Platform

Based on our experience with different testing platforms, we have developed a customized server testing platform in collaboration with our partners.

This customized server is based on the Intel Xeon platform and supports 12 PCIe Gen5 U.2 slots. Its standard 3U chassis provides more space and better cooling, and its fan speed can easily be controlled via software to create a high-temperature testing environment.

2. PyNVMe3 Software Platform

For long-term read/write tests on many drives, we recommend using servers to test multiple SSDs simultaneously and improve testing efficiency. For power cycling, power consumption measurement, and out-of-band management tests, we recommend the desktop testing platform. PyNVMe3 can be deployed on any of the above hardware platforms with a unified user experience. Please refer to the User’s Guide.

2.1. Server Platform Configuration

PyNVMe3 continues to improve the user experience on server platforms. Users can specify the CPU core number, BDF address, and test cases for each test slot in the slot.conf file, as shown in the example below:

```
# slot_N = BDF, CPU, TESTS
slot_0 = 0000:6f:00.0, 0, ./scripts/production/01_normal_io_test.py::test_case1_16k_randrw_1day
slot_1 = 0000:70:00.0, 2, ./scripts/production/01_normal_io_test.py::test_case1_16k_randrw_1day
slot_2 = 0000:46:00.0, 4, ./scripts/production/01_normal_io_test.py::test_case1_16k_randrw_1day
```
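Each slot.conf entry therefore carries three fields. As an illustration of how a slot number resolves to those fields, here is a minimal Python parser for the `slot_N = BDF, CPU, TESTS` format shown above. It is a hypothetical sketch; PyNVMe3’s own handling of slot.conf (for example any extra fields used by PMU2 setups) may differ.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Slot:
    bdf: str    # PCIe address of the DUT, e.g. 0000:6f:00.0
    cpu: int    # CPU core number assigned to the DUT
    tests: str  # pytest-style test selection, e.g. file.py::test_name


def parse_slot_conf(text: str) -> dict[int, Slot]:
    """Parse 'slot_N = BDF, CPU, TESTS' lines, skipping blanks and '#' comments."""
    slots: dict[int, Slot] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        # Split at most twice so that the TESTS field may itself contain commas.
        bdf, cpu, tests = (field.strip() for field in value.split(",", 2))
        slot_number = int(key.strip().split("_", 1)[1])
        slots[slot_number] = Slot(bdf=bdf, cpu=int(cpu), tests=tests)
    return slots
```

Applied to the example file, entry 1 of the result has BDF `0000:70:00.0` and CPU core 2.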

With the slot.conf configuration file, we can simplify the test execution command line as follows:

```shell
make test slot=1
```

This command looks up the test drive’s BDF address, its CPU core number, and the test case to run in the slot.conf configuration. Assuming slot.conf defines slot_0 through slot_11, we can even start tests on 12 SSDs simultaneously as background tasks with the following command line:

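```shell
# Start slots 0-11 as background jobs; `&` already terminates each command, so it must not be followed by `;`
for slot in {0..11}; do nohup make test slot=$slot & done
```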

Since starting tests on 12 drives simultaneously will create a large number of log files in the results directory, we recommend clearing the results directory before testing.
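If a separate log per slot is preferred over a shared `nohup.out`, the same launch can be scripted in Python. This is a hypothetical sketch using only the standard `subprocess` module; the `slot_logs` directory name is an assumption, and the command simply wraps `make test slot=N` from above.

```python
import subprocess
from pathlib import Path


def slot_command(slot: int) -> list:
    """argv for one slot, equivalent to `make test slot=N`."""
    return ["make", "test", f"slot={slot}"]


def launch_slots(slots, log_dir: str = "slot_logs") -> list:
    """Start one `make test` per slot in the background, each with its own log file."""
    Path(log_dir).mkdir(exist_ok=True)
    procs = []
    for slot in slots:
        with open(Path(log_dir) / f"slot_{slot}.log", "w") as log:
            # The child gets its own copy of the file descriptor, so the parent
            # can close `log` immediately. start_new_session=True places the
            # child in a new session, so a terminal hangup does not reach it.
            procs.append(subprocess.Popen(slot_command(slot), stdout=log,
                                          stderr=subprocess.STDOUT,
                                          start_new_session=True))
    return procs


def wait_all(procs) -> list:
    """Block until every test finishes and return the exit codes."""
    return [p.wait() for p in procs]
```

`wait_all(launch_slots(range(12)))` starts slots 0-11 and returns one exit code per slot, which makes it easy to spot a slot whose `make test` failed.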

2.2. WebUI

When testing a single drive, we can monitor its log files to track the current test status. However, this approach becomes inefficient when many SSDs are tested on multiple platforms. Therefore, we provide a professional WebUI that displays the test status, performance, and other information for a specified platform and slot in the test cluster, as shown below.

This WebUI offers several significant benefits:

- Real-Time Monitoring:
  - Through the WebUI, users can monitor all active DUTs (Devices Under Test) in real time, including their slot numbers, BDF (Bus, Device, Function) addresses, and model names. This allows users to quickly and easily obtain the current status and location of each SSD, eliminating the need for manual searching and recording.

- Performance Charts:
  - The WebUI provides detailed performance charts showing IOPS (Input/Output Operations Per Second) and throughput (in MB/s or GB/s) over a specified time range. Users can use these charts to rapidly assess the performance of SSDs and identify potential performance bottlenecks or anomalies.

- Comparison Feature:
  - The WebUI allows users to select and compare the performance of two DUTs. This is particularly useful for evaluating performance differences between different SSD models or the same model under different test conditions.

- Detailed Information:
  - In addition to performance metrics, the WebUI provides other detailed information about the selected DUT, such as firmware version, temperature, and health status. This gives users a comprehensive understanding of the operating condition and potential issues of each SSD.

- Custom Monitoring Functions:
  - The WebUI allows users to define their own monitoring functions. PyNVMe3 periodically collects data based on these custom functions and displays it on the WebUI. This lets users tailor the monitoring process to their specific needs, so that the most relevant data is always at their fingertips.

- Cluster-Wide Monitoring:
  - The WebUI allows users to specify which machines in the entire test cluster to monitor. These machines can be servers, desktops, or even laptops. This flexibility lets users centralize the monitoring and management of all relevant devices, regardless of their type or location within the cluster.

- No Impact on Existing Tests:
  - Importantly, the addition of this WebUI does not impact the functionality or performance of existing tests. Users can continue to run their tests as usual, with the added benefit of enhanced monitoring and data visualization.
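How custom monitoring functions are registered with the WebUI is not described here, so the sketch below covers only the data side: a hypothetical function that turns a raw 512-byte SMART / Health Information log page (Log Identifier 02h in the NVMe specification) into the temperature and health fields mentioned above. How the buffer is fetched from the DUT and the function name itself are assumptions.

```python
import struct


def smart_summary(log_page: bytes) -> dict:
    """Decode a few fields of the NVMe SMART / Health Information log (LID 02h).

    Offsets follow the NVMe Base Specification; multi-byte fields are
    little-endian.
    """
    if len(log_page) < 512:
        raise ValueError("the SMART / Health Information log page is 512 bytes")
    (temp_kelvin,) = struct.unpack_from("<H", log_page, 1)  # bytes 2:1, in Kelvin
    return {
        "critical_warning": log_page[0],     # bit field, 0 means no warning
        "temperature_c": temp_kelvin - 273,  # composite temperature, rounded to integer Celsius
        "available_spare": log_page[3],      # percent of spare capacity remaining
        "percentage_used": log_page[5],      # percent of rated endurance used
    }
```

A monitoring function of this shape returns a small dictionary per sample, which is convenient to plot over time alongside the built-in IOPS and throughput charts.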

By utilizing this professional WebUI, users can significantly improve the efficiency and accuracy of SSD testing, reduce the time spent on manual operations and monitoring, and ensure comprehensive and visualized test data. This is especially important in environments where many SSDs are tested across multiple platforms, aiding in centralized management and optimization of the testing process.

3. Enterprise SSD Test Scripts

On the above software and hardware testing platforms, we have developed numerous enterprise SSD test scripts. Customers can run these scripts directly or use them as references to develop their own test script libraries. The following scripts can be found in the PyNVMe3/scripts directory.

| Test Category | Script Directory | Platform Requirements | Test Duration | Overview |
|---|---|---|---|---|
| Basic Protocol | conformance | Desktop Platform | 1-2 hours | Various protocol and function tests, including PCIe, NVMe, TCG, OCP, etc. |
| Out-of-Band Management | management | Desktop Platform | 1-2 hours | Comprehensive out-of-band management test scripts. |
| Flexible Data Placement | placement | Desktop Platform | 1-2 hours | Comprehensive FDP test scripts. |
| Other Features | features | Desktop Platform | 1-10 minutes | Example test scripts for various enterprise features, intended as a basis for further development by users, including multi-namespace, SGL, PI, ZNS, SRIOV, CMB, MSIx, etc. |
| Product Testing | production | Server and Desktop Platforms | 1-2 weeks | Long-term, high-stress test cases that verify product performance and reliability once the product is relatively stable. |

Some test cases need to control the DUT’s power, so they require the power module test fixture. If the power module is not installed on the test platform, these tests are skipped. Out-of-band management tests require the Total Phase I2C adapter. These tests must be performed on the desktop testing platform described above.

3.1. Product Testing

With the server testing platform, we can significantly increase the scale and duration of the tests. We refer to this type of large-scale, long-duration testing as “Product Testing.” Product testing focuses on checking performance and reliability, including:

- Simulation of various common application scenarios

- Various extreme usage patterns targeting NAND characteristics and limitations

- High-stress tests involving a mix of different IO types

- Testing various FTL scenarios such as wear leveling, garbage collection, error handling, etc.

- Power cycling tests

- JEDEC workload tests

Each product test file takes 1-3 weeks to run, but we can increase concurrency by adding more DUTs and running different test cases on them simultaneously. It is also possible to test SSDs from multiple vendors on the same server and compare the results.
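One way to run different product tests concurrently is to spread the test files across slots when writing slot.conf. The sketch below is a hypothetical generator, not a PyNVMe3 tool: it assigns the production test files from the Test Suites table round-robin to a list of (BDF, CPU) pairs and prints lines in the `slot_N = BDF, CPU, TESTS` format from Section 2.1. It assumes the TESTS field may name a whole test file (a valid pytest node ID), whereas the documented example names a single test case.

```python
from __future__ import annotations

# Product test files from scripts/production, as listed in the Test Suites table.
PRODUCTION_TESTS = [
    "01_normal_io_test.py", "02_mix_io_test.py", "03_data_model_test.py",
    "04_trim_format_test.py", "05_small_range_test.py", "06_jesd_workload_test.py",
    "07_power_cycle_test.py", "08_io_stress_test.py", "09_wl_stress_test.py",
]


def plan_slot_conf(duts: list[tuple[str, int]],
                   tests: list[str] = PRODUCTION_TESTS) -> list[str]:
    """Spread test files round-robin over DUTs and emit slot.conf-style lines."""
    lines = ["# slot_N = BDF, CPU, TESTS"]
    for n, (bdf, cpu) in enumerate(duts):
        test_file = tests[n % len(tests)]
        lines.append(f"slot_{n} = {bdf}, {cpu}, ./scripts/production/{test_file}")
    return lines
```

Since power cycling tests need the power module, 07_power_cycle_test.py would be skipped on a general server; passing a `tests` list without it keeps every server slot busy with tests that can actually run.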

3.2. Test Suites

| Suite | Tests |
|---|---|
| Conformance | Admin, IO, Reset, Power, Register, Controller, TCG |
| Production | 01_normal_io_test.py, 02_mix_io_test.py, 03_data_model_test.py, 04_trim_format_test.py, 05_small_range_test.py, 06_jesd_workload_test.py, 07_power_cycle_test.py, 08_io_stress_test.py, 09_wl_stress_test.py |
| Management | 01_mi_inband_test.py, 02_basic_mgmt_cmd_test.py, 03_mi_cmd_set_test.py, 04_mi_admin_cmd_test.py, 05_mi_control_primitive_test.py, 06_mi_pcie_cmd_test.py, 07_mi_feature_test.py, 08_mi_error_inject_test.py, 09_mi_stress_test.py |
| Placement (FDP) | 01_basic_test.py, 02_logpage_test.py, 03_features_test.py, 04_io_test.py, 05_directive_test.py, 06_namespace_test.py, 07_event_test.py, 08_performance_test.py, 09_stress_test.py |
| Features | CMB, MPS, MSIx, Metadata, PI, NS, SGL |

The PyNVMe3 testing platform offers a comprehensive solution for enterprise SSD evaluations, combining robust software tools with versatile hardware support. Its extensive test scripts and professional WebUI enhance efficiency and precision in SSD testing, ensuring products meet the demanding standards of enterprise environments. This platform is integral for developers and engineers aiming to rigorously assess SSD performance and reliability.